TinyLlama - a small yet effective open source LLM

I wanted a platform to support my upskilling efforts in generative AI, machine learning and natural language processing. Finding one that met my needs took me some time, labor and a surprisingly modest amount of cash.

My first stop on this journey was a software stack in the Docker Hub repository that provides the components needed to accelerate NLP application development. This open source project is called 'docker/genai-stack'.

The GenAI stack

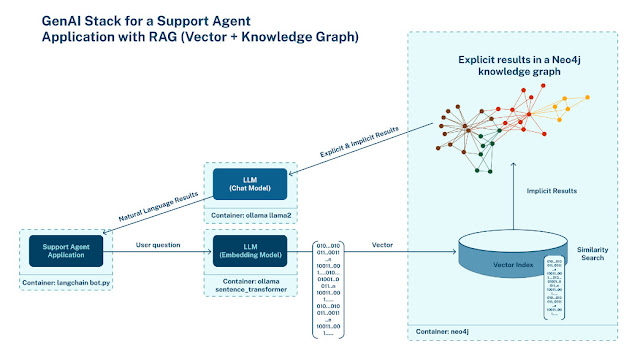

The GenAI stack architecture

The genai-stack offers the following resources to support generative AI application development:

- Neo4j - a Docker container from Neo4j running its graph database, which the stack uses as the vector store that is critical for RAG application development.

- Ollama - the Ollama project provides a Docker container that exposes an API to applications, allowing interactions with open source large language models. Open source LLMs execute locally on the host computer and avoid sending private data to third-party services, e.g., OpenAI. Ollama provides a library of models. Developers select one, then the genai-stack pulls, runs, and exposes the selected model's resources via API. Currently the Ollama Docker container supports Linux and macOS hosts; support for Windows hosts is on the roadmap.

- CPU execution vs. GPU acceleration - The Ollama container can recognize the presence of a GPU installed on the host and leverage it for accelerated LLM processing. Optionally, the developer can select CPU execution. The genai-stack allows this selection via command line switches when starting the stack.

- Container orchestration - Since the genai-stack packages several containers, orchestration is essential. The stack achieves this via docker-compose, embedding health checks to test container health and error checks to abort the application build and start-up processes.

- Closed LLM selection - The developer can use closed LLMs from AWS and OpenAI if desired.

- Embedding model choice - As with the choice of LLM, the genai-stack offers the developer a choice between open and closed source embedding models. The stack supports closed embedding models from OpenAI and AWS, open models from Ollama, and, in addition, the SentenceTransformer model from SBert.net.

- LangChain API - because the stack aggregates various LLM and embedding model resources, it hides this complexity behind a standard API. The project builds on the LangChain Python framework, giving the developer a wide choice of programming APIs to meet various use cases.

- Demo applications - the genai-stack provides several demonstration applications. One that I found very valuable is the pdf_bot application. It implements the common 'Ask Your PDF' use case out of the box.
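To make the Ollama bullet above more concrete, here is a minimal sketch of talking to a locally running Ollama server directly, without LangChain. It assumes Ollama's default address (`http://localhost:11434`) and its `/api/generate` endpoint, which streams newline-delimited JSON; the genai-stack compose file may expose the service under a different host or port, and the helper names (`build_generate_request`, `join_stream`, `ask`) are my own, not part of the stack.

```python
import json
import urllib.request

# Assumption: Ollama's default address. The genai-stack may map it differently.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def join_stream(lines) -> str:
    """Concatenate the 'response' fragments of a newline-delimited JSON stream."""
    parts = []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue  # skip blank keep-alive lines
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the server marks the final chunk with "done": true
    return "".join(parts)


def ask(model: str, prompt: str) -> str:
    """Send one prompt to the Ollama server and return the complete answer."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as response:
        return join_stream(response)
```

With the stack running and a model already pulled, `ask("tinyllama", "Why is the sky blue?")` would return the generated text as one string. Inside the genai-stack itself, the LangChain wrappers take care of this plumbing.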

I needed a hardware update

I always favor repurposing old hardware for new use cases, versus buying new. For example, until I embarked on this journey, I was using an ancient HP laptop initially designed for Windows 7. I loaded Ubuntu onto it long ago, primarily for a web browser and email reader. But could it do the job with this project?

Naively, I loaded the genai-stack onto it. Executing basic LLM queries was painfully slow because of the vintage Intel Core i3 CPU (1st generation!) and lack of an installed GPU.

A further constraint: the vintage CPU lacked the AVX instruction set that Ollama requires, and thus could not run the Ollama container.
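On a Linux host, a check like the following would have caught the AVX problem before I installed anything. It is a small sketch that reads the `flags` line of `/proc/cpuinfo` (the x86 Linux convention); the function names are my own, and the approach does not apply to macOS or to ARM hosts.

```python
def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU feature flags listed in /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            # Every core repeats the same flags line, so the first one is enough.
            return set(value.split())
    return set()


def supports(cpuinfo_text: str, feature: str) -> bool:
    """True if the named feature (e.g. 'avx' or 'avx2') appears in the flags."""
    return feature.lower() in cpu_flags(cpuinfo_text)


def read_cpuinfo(path: str = "/proc/cpuinfo") -> str:
    """Read the kernel's CPU description (Linux only)."""
    with open(path, encoding="utf-8") as handle:
        return handle.read()
```

Calling `supports(read_cpuinfo(), "avx")` on the machine in question returns `False` for a CPU like my old laptop's and `True` for one with AVX support.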

Time for an upgrade!

Returning from a recent business trip, I was sitting in the Denver, CO, airport, waiting for a flight back to the metro NYC area. I was browsing through Amazon when a refurbished Dell Optiplex 9020 server caught my eye.

Dell Optiplex 9020 Mini Tower

The listed price of USD 250 was modest. But did its CPU support the AVX instruction set?

The Amazon listing indicated that the model uses an Intel Core i7 processor. Dell's published tech specs indicated that these 9020 systems were built with 4th generation Intel Haswell processors, which do, indeed, support the AVX (and AVX2) instruction sets.

With 32 GB of RAM and a 1 TB SSD, I felt it had sufficient capacity and was a very economical choice.

The need for speed

Not content without the option to accelerate LLM queries, I set out looking for an NVIDIA GPU for this server. There were two main physical limitations: one I could easily overcome, while the other constrained which GPU I eventually bought.

Dell Optiplex 9020 Mini Tower internals

- The server's physical layout - the SSD mounting cages physically constrain the area around the PCI expansion slots, limiting me to older, shorter NVIDIA GPU cards rather than the more modern, physically larger ones.

- The stock power supply capacity - Dell built the server I purchased with a power supply rated at 290 watts. Further reading and research into GPU options revealed that any GPU I bought would add to the power draw, and the stock power supply was undersized for it.

I found a post in the Dell community forum which guided me to a power supply upgrade and the final selection of an NVIDIA GeForce GTX 1050 Ti GPU. I purchased the higher capacity power supply from Newegg and a used GPU from eBay.

NVIDIA GeForce GTX 1050 Ti GPU

How did the server perform?

Quite well, actually.

I built the system with the upgraded power supply and GPU, then loaded Ubuntu LTS with the NVIDIA GPU drivers. I forked the upstream project, made a few modifications, and pushed the fork to my GitHub repository.

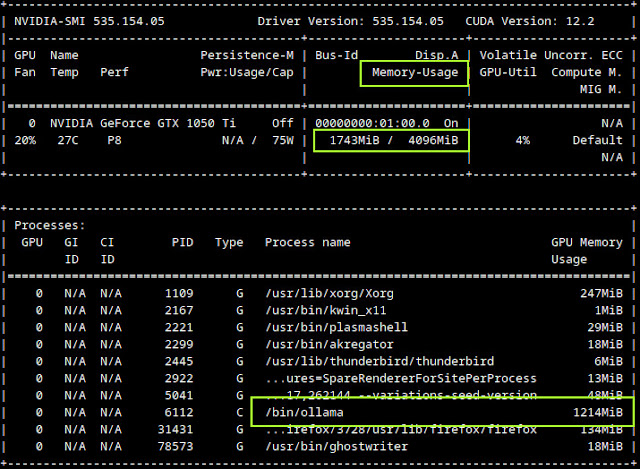

TinyLlama's small footprint fits inside this GPU
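The caption's claim can be sanity-checked with rough arithmetic. The sketch below estimates the size of a model's weights from its parameter count and the number of bits stored per weight, then compares that against GPU memory. The inputs are assumptions on my part: TinyLlama has roughly 1.1 billion parameters, the GTX 1050 Ti carries 4 GB of memory, and the 1 GiB allowance for context cache and runtime overhead is a guess rather than a measurement.

```python
def weights_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits(params_billion: float, bits_per_weight: float,
         vram_gib: float, overhead_gib: float = 1.0) -> bool:
    """Rough check: do the weights plus an assumed overhead fit in GPU memory?"""
    return weights_gib(params_billion, bits_per_weight) + overhead_gib <= vram_gib


# Assumed figures: TinyLlama ~1.1B parameters, GTX 1050 Ti with 4 GB of memory.
VRAM_GIB = 4.0
for label, params, bits in [
    ("TinyLlama, 16-bit", 1.1, 16),
    ("TinyLlama, 4-bit", 1.1, 4),
    ("7B model, 16-bit", 7.0, 16),
]:
    size = weights_gib(params, bits)
    verdict = "fits" if fits(params, bits, VRAM_GIB) else "does not fit"
    print(f"{label}: about {size:.1f} GiB of weights, {verdict}")
```

By this estimate TinyLlama fits even at 16-bit precision, while a 7-billion-parameter model at the same precision needs roughly three times the card's memory. Actual usage depends on the quantization Ollama ships and on the context length.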

- Ollama with GPU - The Ollama project provides a wide selection of models, varying in size and fine-tuned to various use cases. Given the modest dimensions of the 1050 Ti GPU, I was delighted to find the TinyLlama model fit within the GPU memory space. Even though TinyLlama has a small memory footprint, it performs well regarding response speed and generated output, as observed with the pdf_bot application using its web browser interface.

- pdf_bot.py modifications - After I forked the project, I modified pdf_bot.py to allow scanning, parsing and vectorizing Microsoft Word .docx files. In addition to querying PDF documents in natural language, I can now make similar queries against MS Word documents.

- api.py modifications - I modified the genai-stack provided api.py module, pointing it to select the vectorized documents from neo4j and away from another demo application provided by the upstream project.

- pdf_bot_cli.sh creation - Wanting a command line interface to supplement the supplied web interface, I created a bash wrapper script for the API. The script executes curl, obtains the JSON API responses and prints clean output on the terminal console. I also added a command line option that reads a list of queries from an input text file, which is quite handy for running a batch of queries against a vectorized document. I wrote the shell script quickly, and its response time is poor. I intend to build a better-performing Python script with improved streaming.
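To illustrate the .docx support mentioned in the pdf_bot.py bullet above, here is a standard-library sketch of the two steps that come before vectorizing: pulling the text out of a Word file and splitting it into chunks. It is not the code in my fork, which relies on the stack's existing libraries; the function names and the 1000-character chunk size are placeholders. It works because a .docx file is a zip archive whose main text lives in `word/document.xml`.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# XML namespace used by WordprocessingML elements such as <w:p> and <w:t>.
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def docx_paragraphs(source) -> list[str]:
    """Return the non-empty paragraphs of a .docx file (path or file-like object)."""
    with zipfile.ZipFile(source) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(f"{W_NS}p"):
        # A paragraph's text is spread over one or more <w:t> runs.
        text = "".join(node.text or "" for node in para.iter(f"{W_NS}t"))
        if text.strip():
            paragraphs.append(text)
    return paragraphs


def chunk_text(paragraphs: list[str], max_chars: int = 1000) -> list[str]:
    """Greedily pack paragraphs into chunks of up to max_chars characters.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    chunks, current = [], ""
    for para in paragraphs:
        candidate = f"{current}\n{para}" if current else para
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Each chunk returned by `chunk_text(docx_paragraphs("report.docx"))` would then be passed to the embedding model and stored in Neo4j, the same path the PDF text already follows. The file name here is only an example.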

Where I finally landed

Overall, I spent approximately USD 450 on this project, which met my original goals: owning a hardware platform capable of generative AI and NLP application development using open source LLMs, to assist me in my upskilling efforts. I am also satisfied because I reused aftermarket, aging hardware for modern application development with surprising performance characteristics. This approach fits with my personal ethos of being environmentally responsible. I achieved this through implementing the concept of "reduce, reuse and recycle".

If you have further questions on this project and what it can do, feel free to reach out via InMail on LinkedIn.